I am a postdoctoral research associate at the Hamburg Observatory. Previously, I was a Marie Skłodowska-Curie Actions Postdoctoral Fellow at the same institute and a postdoctoral research associate in the Department of Physics & Astronomy at Michigan State University.

In general, my interdisciplinary research covers both physics, e.g., (astrophysical) plasma modeling including magnetohydrodynamic processes and their role in (astro)physical systems, and computer science, e.g., parallelization and high performance computing, as well as topics in between such as computational fluid dynamics and numerical methods. Currently, I am working on analyzing and characterizing energy transfer in compressible MHD turbulence, understanding driving and feedback mechanisms in astrophysical systems, and performance portable programming models for exascale computing.

Contact information

| Office | Room: 105, Direktorenvilla |
| Email | pgrete [at] hs.uni-hamburg.de |
| Phone | +49 40 42838 8536 |
| Address | Hamburg Observatory, University of Hamburg, Gojenbergsweg 112, 21029 Hamburg |

Funding

My current research is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation), project number 555983577.

Part of my previous research was supported by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 101030214.

News and upcoming events

| Jul 2025 |

Self-Similar Cosmic-Ray Transport in High-Resolution MHD Turbulence

Last year we received another DOE INCITE award on Pushing the Frontier of Cosmic Ray Transport in Interstellar Turbulence (PI D. Fielding). The first results from these simulations have now been submitted to the Astrophysical Journal Letters: Kempski et al. arXiv:2507.10651.

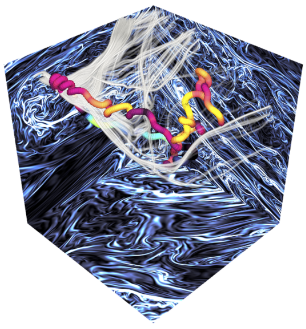

By propagating cosmic ray particles through an MHD turbulence simulation (with 10240³ cells so that the Alfvén scale is well resolved), we show that sharp bends in the magnetic field are key mediators of particle transport even on small scales via resonant curvature scattering and that particle scattering in the turbulence shows strong hints of self-similarity. This suggests that large-amplitude MHD turbulence can provide efficient scattering over a wide range of CR energies. For more details, check out the paper.

| |

| Jul 2025 |

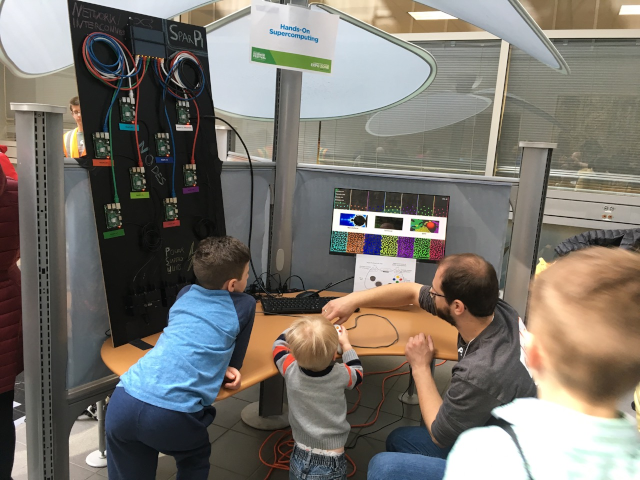

Sternstunden Festival: this year with a science slam

After the big success of the Sternstunden Festival in previous years, this year's edition of the two-day joint astro-music event (between the Hamburg Observatory and University Music) received another science activity: a science slam. I took the opportunity and presented What we shot into space - and what came back?, which was well received by the audience. In addition, we again ran our interactive supercomputer exhibit and virtual reality experience from last year.

| |

| Mar 2025 |

DFG project funded

Last year I submitted a proposal for an individual research grant to the DFG (German Research Foundation) on Energy dynamics in diffuse astrophysical plasmas: multiscale, multiphase, and multiphysics. The proposal got favorable reviews and I will receive funding that covers my position for the next three years starting in June this year. | |

| Feb 2025 |

First XMAGNET results

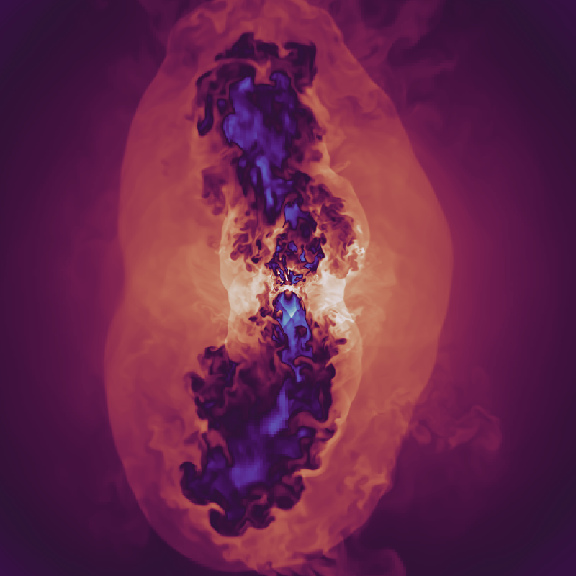

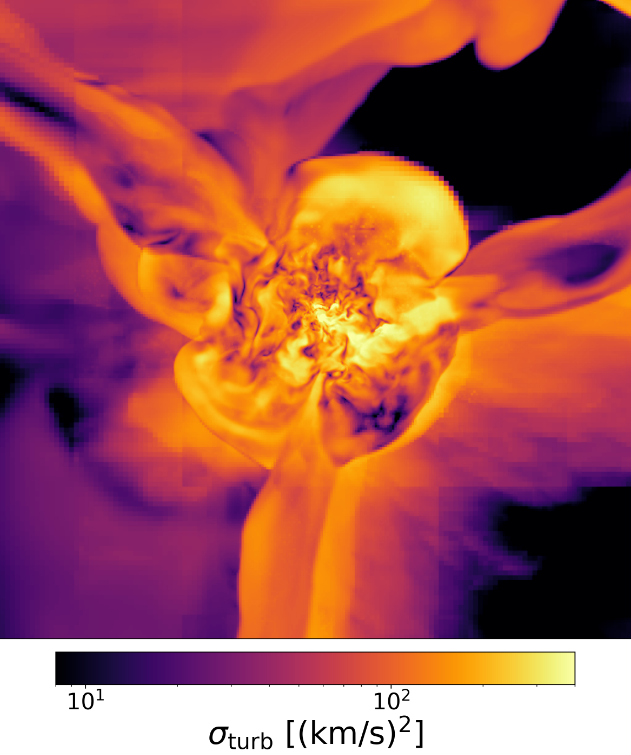

After several years of code development, running simulations, and analyzing them, we finally submitted the first results from the XMAGNET (“eXascale simulations of Magnetized AGN feedback focusing on Energetics and Turbulence”) project. The project was supported through a DOE INCITE award and conducted on the first TOP500 exascale supercomputer Frontier.

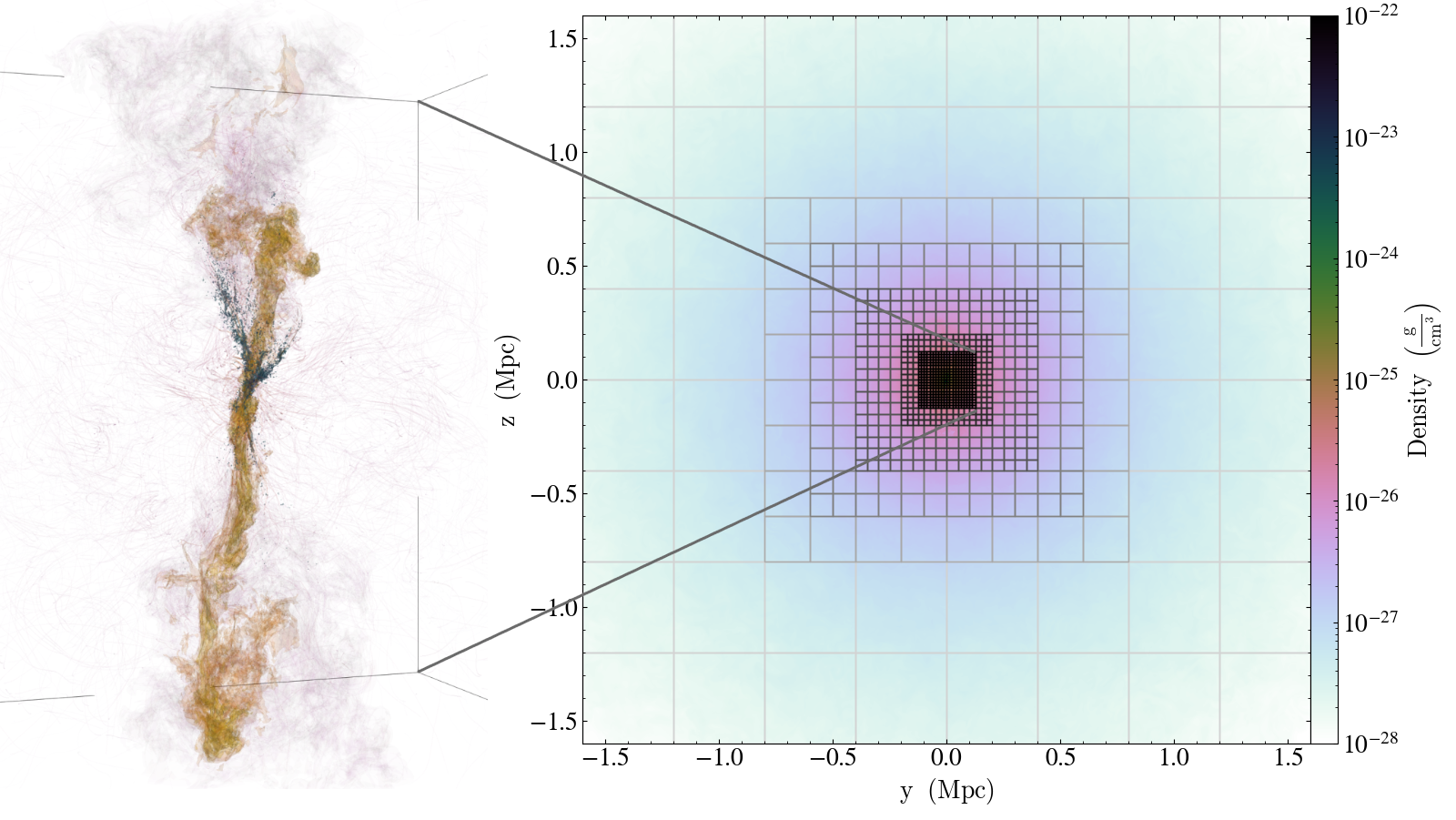

One unique aspect of these simulations is that the central region (a cube with 256 kpc side length) is covered by a uniform grid with 100 pc cell size. The resulting 2560³ box is ideal to study turbulence without artifacts from varying resolution, which is particularly relevant for the dynamics of the multiphase plasma. Moreover, the computational resources allowed us to evolve various systems for several Gyr so that we can study self-regulation with feedback from supernovae and supermassive black holes in great detail. Grete et al. ApJ (2025) serves as the overview paper introducing the model and simulations with an emphasis on a Perseus-like cluster. Fournier et al. A&A (2025) is a detailed analysis of the multiphase velocity structure functions and their comparison to observations. | |
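As a quick sanity check of the grid numbers quoted for the XMAGNET central region, the following minimal sketch derives the number of cells per side and the total cell count from the side length and cell size. The constant and function names are illustrative only and are not taken from the simulation setup.

```python
# Uniform central grid: side length and cell size as quoted in the text.
SIDE_LENGTH_PC = 256_000  # 256 kpc expressed in pc
CELL_SIZE_PC = 100        # 100 pc per cell


def cells_per_side(side_length_pc: int, cell_size_pc: int) -> int:
    """Number of cells along one edge of the cube."""
    if side_length_pc % cell_size_pc != 0:
        raise ValueError("side length must be a multiple of the cell size")
    return side_length_pc // cell_size_pc


n = cells_per_side(SIDE_LENGTH_PC, CELL_SIZE_PC)
total_cells = n ** 3
print(n)            # 2560
print(total_cells)  # 16777216000
```

In other words, the uniform region alone contains roughly 1.7 × 10¹⁰ cells.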

| Jan 2025 |

JUPITER here we come

After successful participation in the JUREAP program, we received an "Exascale Certificate", which allowed us to apply for time in the GCS Exascale Pioneer Call. Our proposal on Inertial range dynamics in the exascale era with the largest compressible magnetized turbulence simulation was eventually selected as one of only a few lighthouse projects. Therefore, we will get access to the pre-production machine, allowing us to stress the system while addressing the question of asymptotic energy dynamics in MHD turbulence.

| |

| Jul 2024 |

Sternstunden Festival: Interactive supercomputing and virtual reality

After the big success of the Sternstunden Festival last year, I got involved scientifically in this two-day joint astro-music event (between the Hamburg Observatory and University Music) this year. In addition to the interactive supercomputer model already used in the past, we also set up two virtual reality stations where people could immerse themselves in a realistic depiction of our solar system and galaxy (using Gaia Sky VR) as well as in a simulation of the galactic center illustrating stellar winds (available on Steam for free).

It is also worth checking out the official aftermovie. | |

| Jun 2024 |

JUREAP - the JUPITER Research and Early Access Program

Next year JUPITER is going to be installed in Jülich as the first exascale supercomputer in Europe. In preparation, the JUPITER Research and Early Access Program (JUREAP) was launched to optimally prepare applications and users for JUPITER. I successfully applied to the program and will get access to JEDI (the JUPITER Exascale Development Instrument), which is a cabinet of the final system and currently the most energy efficient system on the Green500 list. | |

| Feb 2024 |

KITP Conference: Turbulence in the Universe

I am very happy that I got invited to give a talk at the KITP Conference on Turbulence in the Universe in Santa Barbara. This gave me the chance to present the latest results of our ongoing INCITE project and connect them to previous work on MHD turbulence. A recording of the talk MHD turbulence from idealized boxes to the ICM is available here as is the remainder of the full program in line with the KITP mission. | |

| Jan 2024 |

OLCF User Conference Call presentation

Every month the Oak Ridge Leadership Computing Facility (OLCF), which operates the first TOP500 exascale supercomputer Frontier, hosts a User Conference Call. This month I was invited to talk about First experiences at the exascale with Parthenon – a performance portable block-structured adaptive mesh refinement framework. I highlighted our performance-motivated key design decisions in developing Parthenon and shared our experiences and challenges in scaling up, with an emphasis on handling the number of concurrent messages on the interconnect, writing large output files, and post-processing them for visualization, all of which also translate to other applications. The recording and slides are available online.

Paper update

I am happy to share that the astrophysical application paper on Magnetic field amplification in massive primordial halos: Influence of Lyman-Werner radiation led by graduate student Vanesa Díaz was accepted for publication in Astronomy & Astrophysics. It is a follow-up of the Grete et al 2019 paper with an emphasis on varying the background radiation (which we previously held constant). The paper is already available online. | |

| Nov 2023 |

SC23 Best Paper Award Nomination

The paper Frontier: Exploring Exascale submitted to Supercomputing 2023 by Atchley et al. was nominated for the Best Paper Award. Besides a general overview of Frontier and baseline benchmark results, it features AthenaPK as one of the early applications on Frontier that met the key performance parameters in scaling up from Summit. The published paper is available in the proceedings or through open access. | |

| Sep 2023 |

Successful QU Postdoc Grant for Sustainable Software Development

The Quantum Universe Cluster of Excellence at the University of Hamburg annually calls for proposals for a postdoc research grant. I applied earlier this year with a project on Sustainable software development. More specifically, I requested 10,000 EUR to buy a workstation with GPUs from different vendors. This machine should provide continuous integration and testing services supporting not only our own projects but also, more broadly, developers of GPU codes at the university. The ease of access to these resources will lower the threshold for adopting sustainable software development practices. After the first round of written proposals, I also presented the project in person. I am now happy to report that my proposal got selected and look forward to the machine arriving on campus. | |

| May 2023 |

CUG23 Best Paper Runner-up Award

We (J. K. Holmen, P. Grete, and V. G. Melesse Vergara) submitted a paper to the Cray User Group Meeting 2023 in Helsinki on Early Experiences on the OLCF Frontier System with AthenaPK and Parthenon-Hydro. While I attended the Athena++ Workshop in the Center for Computational Astrophysics, Flatiron Institute, to give an invited talk on AthenaPK, John Holmen presented our accepted paper at CUG23. It was well received and awarded the Best Paper Runner-up Award. Therefore, it will be published later this year in a special edition of the Concurrency and Computation Practice and Experience Journal. Please contact me if you are interested in a preprint. Earlier in April, we were invited to give a talk on Parthenon in the EE04: V: Exascale Computational Astrophysics session at the annual April Meeting of the American Physical Society in Minneapolis, MN. The talk presented by F. Glines is covered among the other talks by a more detailed press release. | |

| May 2023 |

Paper updates

Over the past months several projects came to a successful conclusion.

As a matter of dynamical range — scale dependent energy dynamics in MHD turbulence by Grete, O'Shea, and Beckwith (see below) was accepted without any major revision for publication in The Astrophysical Journal Letters. The final full text (open access) can be found at doi:10.3847/2041-8213/acaea7.

A morphological analysis of the substructures in radio relics by Wittor, Brüggen, Grete, and Rajpurohit was published in Monthly Notices of the Royal Astronomical Society. In both observations and simulations of radio relics we find that both the magnetic field and the shock front consist of filaments and ribbons that cause filamentary radio emission (i.e., it is not a projection effect of sheet-like structures). The full text (and associated code) can be found at doi:10.1093/mnras/stad1463 and arXiv:2305.07046.

The Launching of Cold Clouds by Galaxy Outflows. V. The Role of Anisotropic Thermal Conduction by Brüggen, Scannapieco, and Grete was published in The Astrophysical Journal. It is the first (astro)physics application paper featuring simulations with AthenaPK, for which we implemented a second-order accurate Runge–Kutta–Legendre super-time-stepping approach for diffusive fluxes to make these simulations feasible. The final full text (open access) can be found at doi:10.3847/1538-4357/acd63e. | |

| Apr 2023 |

On 27 April we welcomed over 20 girls from high schools at the Observatory for this year's Girlsday. In addition to projects on spectroscopy and radio observations, we also offered two on computing this year. First, using the supercomputer model I built for the Open Day (see below), we interactively demonstrated domain decomposition, load balancing and (the limits of) numerical algorithms. Second, using AthenaPK the participants were able to explore simulations using adaptive mesh refinement and even create their own input file to study the interaction of a blast wave with its surroundings, see video. PS: The video resolution is significantly higher than the small preview. Click on the full-screen button (bottom right) to enlarge. | |

| Dec 2022 |

INCITE award

I am happy to share that we (i.e., B. O'Shea, P. Grete, F. Glines, D. Prasad) received an INCITE award this year.

Starting in Jan 2023, we will use the first TOP500 exascale supercomputer Frontier to study Feedback and energetics from magnetized AGN jets in galaxy groups and clusters using AthenaPK. The capabilities of Frontier will allow us to conduct the highest dynamical range studies of the diffuse intragroup and intracluster plasmas ever achieved, studying the accretion of gas onto supermassive black holes, the impact of the resulting AGN jets on the interstellar and circumgalactic plasma (cf., the illustration by D. Prasad on the right), and the relationship between these phenomena. We will also receive support for visualizing the results. Stay tuned for regular updates. | |

| Nov 2022 |

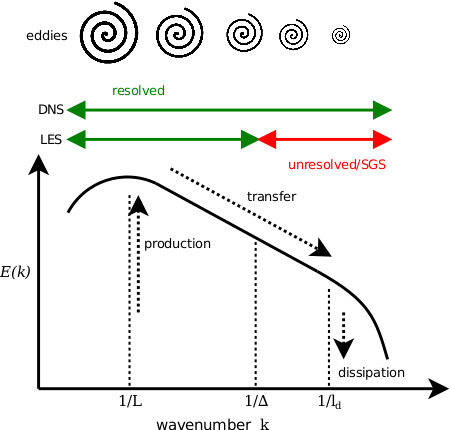

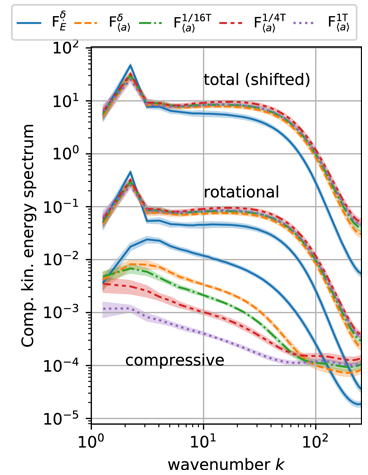

As a matter of dynamical range — scale dependent energy dynamics in MHD turbulence

I submitted our latest paper in what can now probably be called the As a matter of ... series. While the paper itself is not too long, we present two important results.

First, contrary to hydrodynamic turbulence, cross-scale (i.e., from large to small scales) energy fluxes are constant neither with respect to the mediator (such as advection or magnetic tension) nor with respect to dynamical range (or Reynolds number). Given that we do not observe any indication of convergence, this calls into question whether an asymptotic regime in MHD turbulence exists, and, if so, what it looks like, which is highly relevant to the modeling community (and, thus, to interpreting observations). Second, we demonstrate how to measure numerical dissipation in implicit large eddy simulations (ILES, such as shock-capturing finite volume methods) and that ILES correspond to direct numerical simulations (DNS) with equivalent explicit dissipative terms included. The full text of the submitted version is available at arXiv:2211.09750 as usual. | |

| Oct 2022 |

Parthenon has a new home and first developer meeting

In early October we organized the first (excluding the kickoff meeting in Jan 2020) larger Parthenon developer meeting. More than 20 people from 7 different institutions attended in person. We had a very productive week with many mid- to long-term strategic discussions. The results and plans forward can be found in various GitHub issues. One non-technical result is that Parthenon now has a new home, the Parthenon HPC Lab organization on GitHub. The organization now hosts the Parthenon code itself, but also various downstream codes including the parthenon-hydro miniapp and AthenaPK. | |

| Oct 2022 |

Open Day at the Observatory

After a two-year break, an Open Day at the Observatory was finally possible again. The program was packed with talks, Q&A sessions, guided tours, interactive exhibitions, public observing, and more.

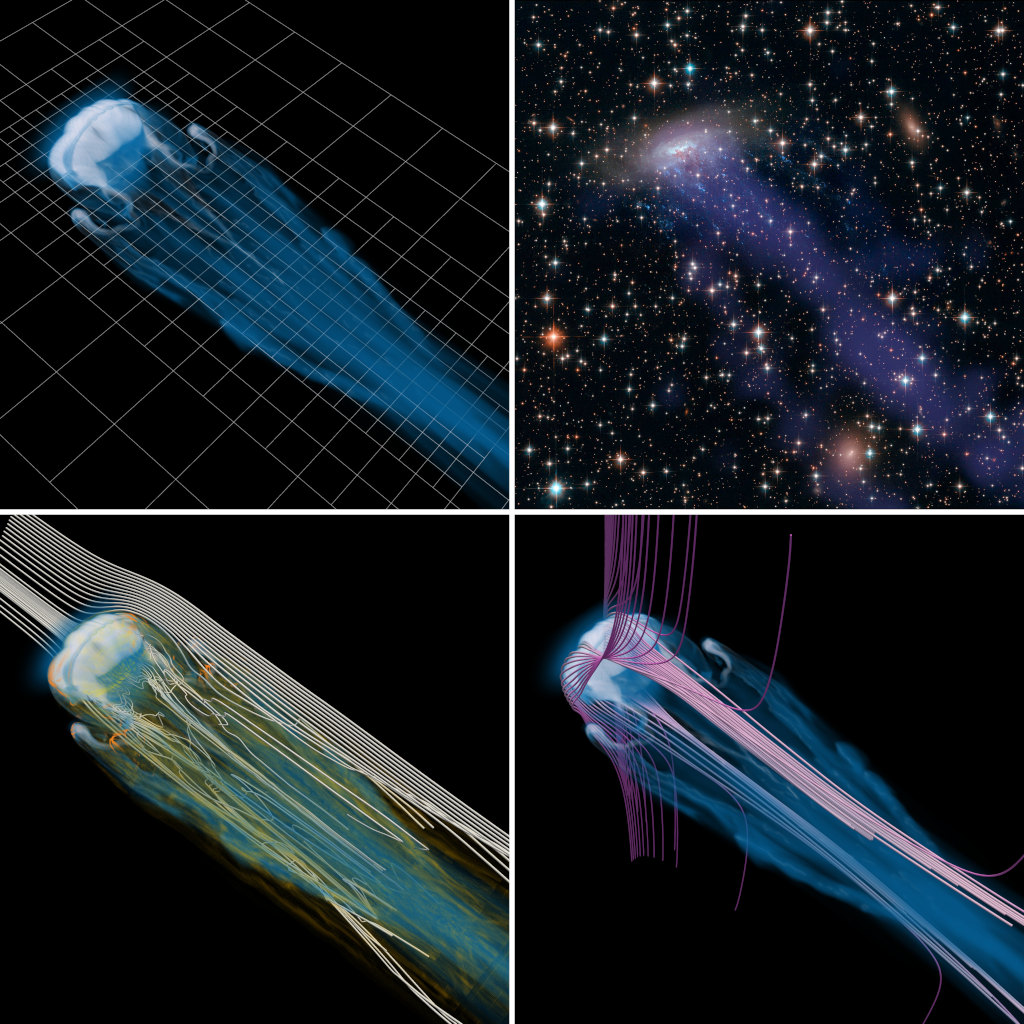

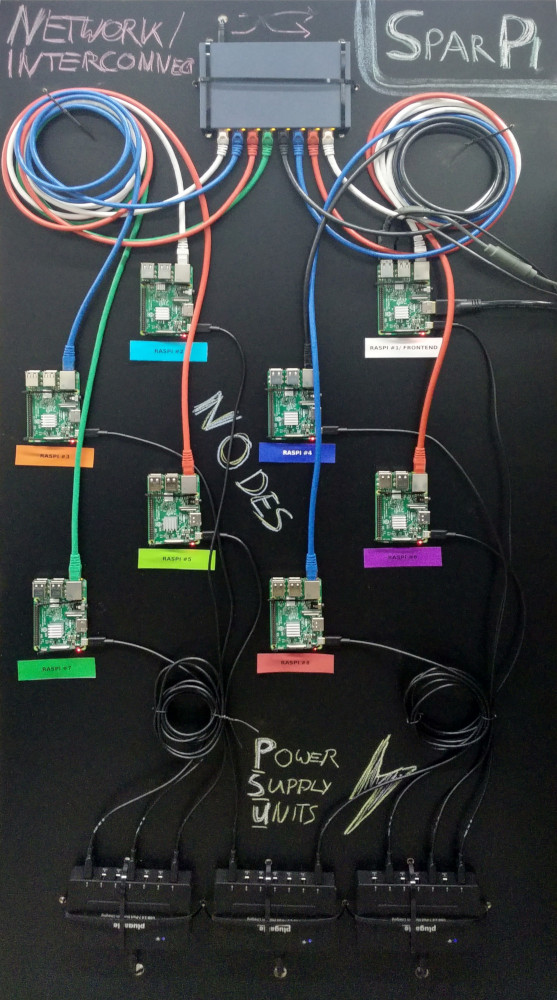

I built a new Raspberry Pi based supercomputer model similar to SparPi at MSU (see below) and used it for interactive demonstrations of simulations in astrophysics. Again, visitors of all ages enjoyed the various aspects of the setup, ranging from controlling gravity (do things flow up if gravity is negative?) or viscosity (water or honey?) to concepts of parallel computing to how gas/plasma is treated as a fluid in many astrophysical simulations.

In preparation for the Open Day, several generic pictures in the main building were replaced by illustrations of research conducted at the observatory.

This includes an illustration of a current project between Marcus Brüggen, Evan Scannapieco, and me on cloud crushing simulations with magnetic fields and anisotropic (with respect to the local direction of the magnetic field) thermal conduction. The illustration shows a volume rendering of the plasma density with the (adaptively refined) simulation grid (top left), velocity streamlines in gray and strong vortical motions in orange (bottom left), magnetic field lines that drape around the cloud (bottom right), and an observation of a "jellyfish" galaxy for reference (top right). A paper covering this project is in preparation. | |

| Sep 2022 |

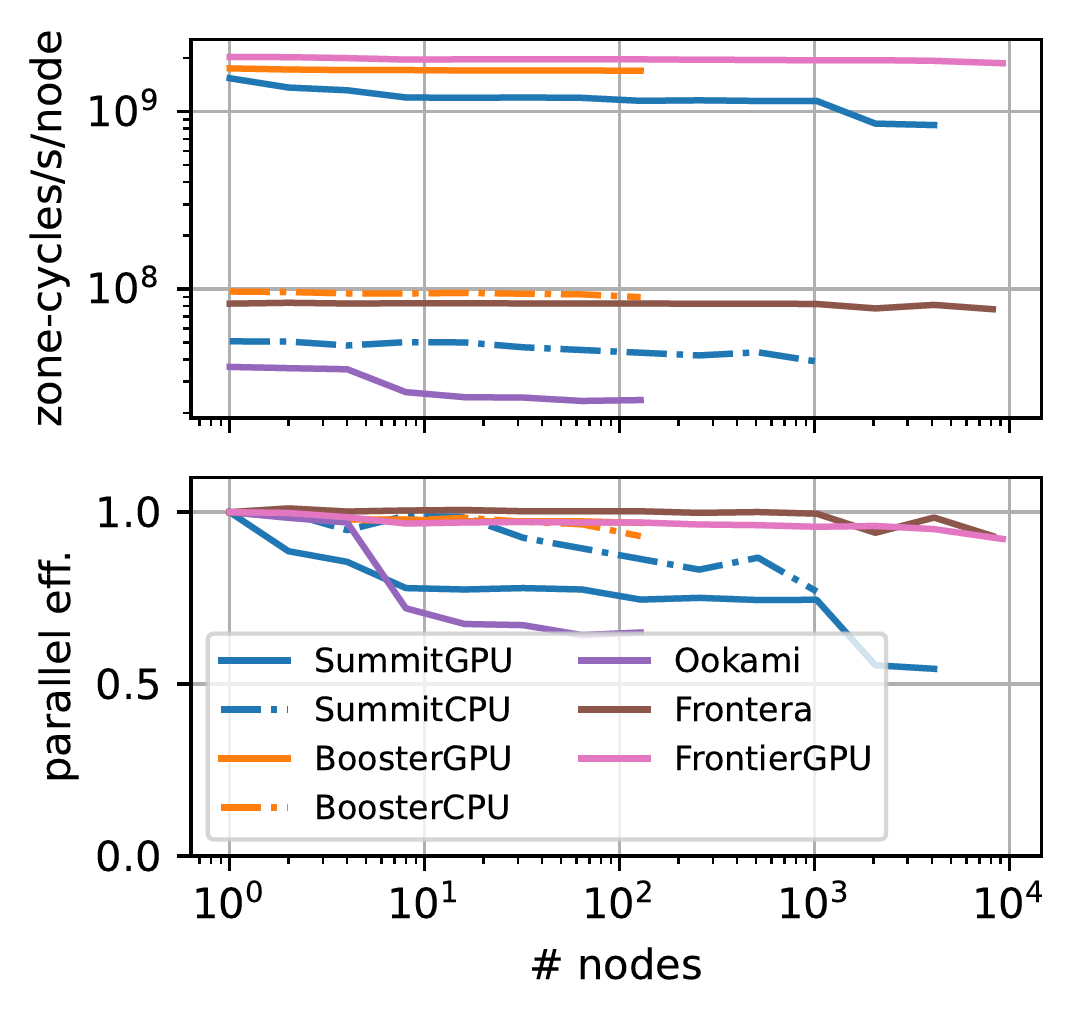

Parthenon runs at scale on the first TOP500 exascale supercomputer Frontier

Over the past months, I have been working with John Holmen at OLCF on using the parthenon-hydro miniapp in acceptance testing of Frontier, the first TOP500 exascale supercomputer.

Now I can finally share the results and am happy to report that we reached 92% weak scaling parallel efficiency going from a single node all the way to 9,216 nodes. At that scale the simulation ran on 73,728 GPUs in parallel. The updated results including additional scaling plots are included in the revised version of the Parthenon paper. | |
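For readers unfamiliar with the metric: weak scaling parallel efficiency is commonly defined as the ratio of the runtime on the baseline (here a single node) to the runtime on the larger machine partition, with the work per node held fixed. The sketch below illustrates that definition. The timings are hypothetical placeholders chosen for illustration, not measured values, and the function name is my own.

```python
def weak_scaling_efficiency(t_base: float, t_scaled: float) -> float:
    """Weak scaling parallel efficiency for fixed work per node.

    t_base:   wall time per cycle on the baseline (e.g., a single node)
    t_scaled: wall time per cycle on the larger node count
    """
    if t_base <= 0 or t_scaled <= 0:
        raise ValueError("timings must be positive")
    return t_base / t_scaled


# Hypothetical timings chosen for illustration only (not measured values).
t_one_node = 0.92
t_many_nodes = 1.00
efficiency = weak_scaling_efficiency(t_one_node, t_many_nodes)
print(f"{efficiency:.0%}")  # 92%

# Consistency check of the quoted counts: GPUs (as seen by the code) per node.
gpus_per_node = 73_728 // 9_216
print(gpus_per_node)  # 8
```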

| Sep 2022 |

Teaching paper published

In 2019 I took part in the Professional Development Program of the Institute for Scientist & Engineer Educators as a design team leader, aiming to develop an inquiry activity on Sustainable Software Practices with Collaborative Version Control. The module was implemented multiple times (both in person and online) and well received. Therefore, we decided to prepare a more formal write-up. The results are now published as Frisbie, R. L, Grete, P., & Glines, F. W. (2022). An Inquiry Approach to Teaching Sustainable Software Development with Collaborative Version Control. UC Santa Cruz: Leaders in effective and inclusive STEM: Twenty years of the Institute for Scientist & Engineer Educators. The article is open access and released under a CC-BY 4.0 license. | |

| Aug 2022 |

Conferences/workshops

After a long streak of virtual meetings, I was very happy to travel again and have a much more productive exchange with colleagues in person:

| |

| Jun 2022 |

Turbulence driver in AthenaPK

I finished porting the turbulence driver originally implemented in Athena and K-Athena to AthenaPK. As before, the driver allows controlling the ratio of compressive to solenoidal modes of the driving, the energy injection scales and power, and the correlation time of the stochastic process describing the evolution of the driving field. | |
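To make the driver parameters above more concrete, here is a minimal, self-contained sketch of two generic building blocks of stochastic turbulence driving: mixing the solenoidal and compressive parts of a single Fourier mode, and advancing a mode amplitude with an Ornstein-Uhlenbeck process of a given correlation time. This is not the AthenaPK implementation. The function names, the mixing weight `zeta` (a convention used in parts of the literature), and all parameter values are assumptions for illustration.

```python
import math
import random


def project_mode(k, a, zeta):
    """Mix solenoidal and compressive parts of amplitude `a` for wavevector `k`.

    zeta = 1 gives purely solenoidal (divergence-free) driving,
    zeta = 0 gives purely compressive (curl-free) driving.
    """
    k2 = sum(ki * ki for ki in k)
    if k2 == 0.0:
        raise ValueError("wavevector must be non-zero")
    a_dot_k = sum(ai * ki for ai, ki in zip(a, k))
    compressive = [a_dot_k * ki / k2 for ki in k]             # component along k
    solenoidal = [ai - ci for ai, ci in zip(a, compressive)]  # perpendicular to k
    return [zeta * s + (1.0 - zeta) * c for s, c in zip(solenoidal, compressive)]


def ou_step(x, dt, t_corr, sigma, rng):
    """Exact one-step update of an Ornstein-Uhlenbeck process with
    correlation time `t_corr` and stationary standard deviation `sigma`."""
    decay = math.exp(-dt / t_corr)
    return x * decay + sigma * math.sqrt(1.0 - decay * decay) * rng.gauss(0.0, 1.0)


rng = random.Random(42)  # fixed seed for reproducibility
k = (1.0, 2.0, 0.0)      # hypothetical driving wavevector
amplitude = [ou_step(0.0, dt=0.1, t_corr=1.0, sigma=1.0, rng=rng) for _ in range(3)]
solenoidal_mode = project_mode(k, amplitude, zeta=1.0)

# A purely solenoidal mode is perpendicular to k (zero divergence in Fourier space).
print(abs(sum(s * ki for s, ki in zip(solenoidal_mode, k))) < 1e-12)
```

A short correlation time makes successive driving fields nearly independent, whereas a long one keeps the driving pattern coherent over many timesteps.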

| Apr 2022 |

GCS Large Scale project proposal accepted

Earlier this year I submitted a computing time proposal on The role of low collisionality in compressible, magnetized turbulence to support my Marie Skłodowska-Curie Actions research. The proposal was well received and I got 7.5 million core-h on the JUWELS Booster Module for the simulations and 1 million core-h on the JUWELS Cluster Module CPU for post-processing. Over the course of May 2022 to April 2023 I will use the time to conduct and analyze simulations with anisotropic transport processes. Here, anisotropic refers to the transport of energy and momentum with respect to the local magnetic field direction.

Texascale days - parthenon-hydro using 458752 ranks/cores

I got the chance to conduct scaling tests of parthenon-hydro on TACC's Frontera supercomputer. At the largest scale I ran a simulation on 8192 nodes using almost half a million CPU cores (with one MPI rank per core). At this scale parthenon-hydro still reached 93% weak scaling parallel efficiency (compared to running on a single node). The more detailed results will be included in the revision of the Parthenon paper. | |

| Mar 2022 |

Second-order, RKL-based operator split super timestepping in AthenaPK

Parabolic terms (like the ones describing thermal conduction or viscosity/momentum diffusion) can put a severe constraint on the explicit timestep in a simulation. To circumvent this issue, various solutions exist, including implicit and super timestepping methods where multiple subcycles are taken during one large (hyperbolic) timestep. The latter is particularly suitable for highly-parallelized, finite volume codes like AthenaPK. For this reason, I have implemented the second-order accurate method by Meyer, Balsara, and Aslam (2014) in AthenaPK in preparation for running simulations with anisotropic transport processes. | |
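To illustrate why super timestepping pays off, the sketch below estimates how many stages a second-order Runge-Kutta-Legendre (RKL2) scheme needs to cover one hyperbolic timestep. It assumes the stability limit usually quoted for RKL2, namely that an s-stage super step can be as large as (s² + s − 2)/4 times the explicit parabolic timestep. The function name and the example timesteps are hypothetical, and production codes may impose further constraints (such as an odd stage count), so this is not a description of the AthenaPK implementation.

```python
def rkl2_stages(dt_hyperbolic: float, dt_parabolic: float) -> int:
    """Smallest stage count s with dt_parabolic * (s**2 + s - 2) / 4 >= dt_hyperbolic."""
    if dt_hyperbolic <= 0 or dt_parabolic <= 0:
        raise ValueError("timesteps must be positive")
    s = 2  # with s = 2 the super step equals the explicit parabolic step
    while dt_parabolic * (s * s + s - 2) / 4.0 < dt_hyperbolic:
        s += 1
    return s


# Hypothetical timesteps: the parabolic limit is 100x more restrictive.
stages = rkl2_stages(dt_hyperbolic=1.0, dt_parabolic=0.01)
print(stages)  # 20
```

In this example 20 stages replace the 100 explicit subcycles that plain subcycling would need, because the stable step grows roughly quadratically with the number of stages.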

| Feb 2022 |

Parthenon paper submitted

The Parthenon collaboration just submitted the first code paper describing the key features of Parthenon - a performance portable block-structured adaptive mesh refinement framework. The preprint is available at arXiv:2202.12309. Key features that are described in detail in the paper are

| |

| Jan 2022 |

parthenon-hydro miniapp public

I am happy to announce that parthenon-hydro is now available on GitHub at https://github.com/pgrete/parthenon-hydro. It is a finite volume, compressible hydrodynamics sample implementation using the performance portable adaptive mesh framework Parthenon and Kokkos. It is effectively a simplified version of AthenaPK demonstrating the use of the Parthenon interfaces (including the testing framework) with a use-case that is more complex than the examples included in Parthenon itself. | |

| Nov 2021 |

Conference presentations

Last month I got the opportunity to present a pre-recorded talk at the virtual RAS specialist meeting on Galactic magnetic fields: connecting theory, simulations, and observations. My short talk on As a Matter of Tension: Kinetic Energy Spectra in MHD Turbulence is now online and available on YouTube. This month I also attended the virtual IAU Symposium 362 on The predictive power of computational astrophysics as discovery tool and gave a presentation on Performance Portable Astrophysical Simulations: Challenges and Successes with K-Athena (MHD) and AthenaPK/Parthenon (AMR). | |

| Oct 2021 |

Welcome Hamburg!

This month I officially started my Marie Skłodowska-Curie Actions Postdoctoral Fellowship at the Hamburg Observatory. I will study processes in weakly collisional plasmas as they are found, for example, in the intracluster medium with a specific emphasis on effects from anisotropic transport processes such as thermal conduction and viscosity. To this end, I am in the process of implementing the necessary numerical algorithms in AthenaPK allowing for detailed simulations on current and next generation supercomputers. | |

| Aug 2021 |

Turbulence in the intragroup and circumgalactic medium

Our latest paper by W. Schmidt, J. P. Schmidt and P. Grete has been accepted for publication in Astronomy & Astrophysics, see preprint on arXiv:2107.12125. We conduct and analyze cosmological zoom-in simulations of dark-matter filaments. One key result is that the scaling law between the turbulent velocity dispersion and thermal energy observed in massive objects, such as galaxy clusters, also extends to lower-mass objects down to the CGM of individual galaxies. Update (Oct 2021): The paper has now been published, see doi:10.1051/0004-6361/202140920. | |

| Jun 2021 |

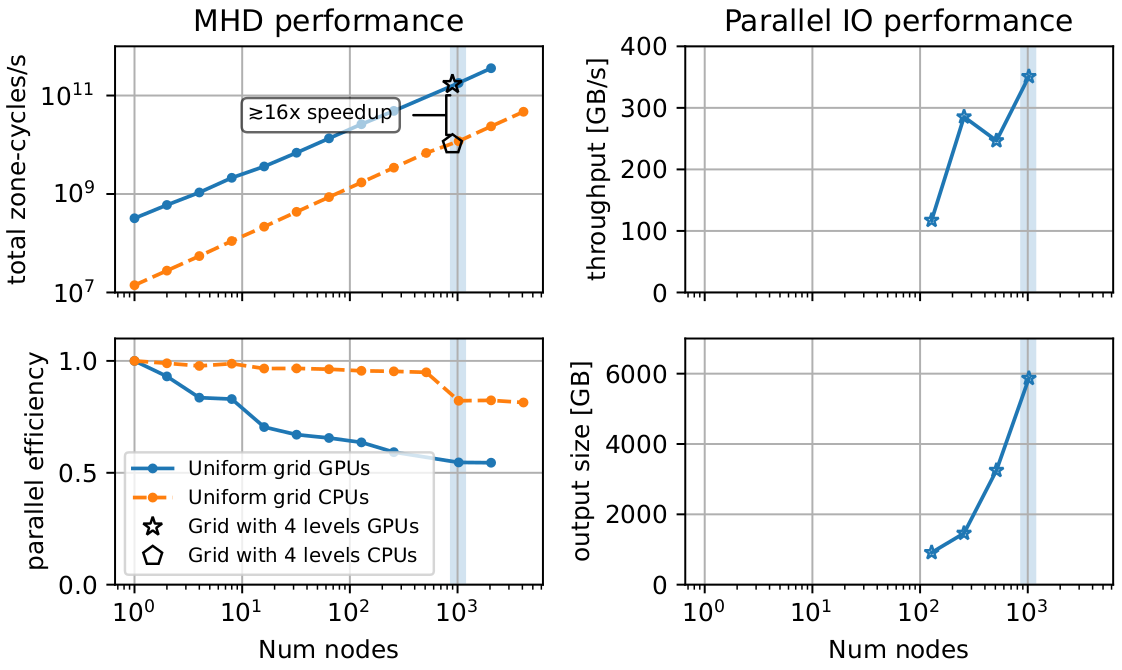

First AthenaPK scaling results

I recently conducted scaling tests on Summit up to 12288 GPUs (2048 nodes) using AthenaPK. While the parallel efficiency is not as good as with K-Athena (yet!), the results are nevertheless very encouraging. Using the GPUs still results in a ≥16x speedup compared to using all CPU cores. Moreover, this also pertains to simulations with mesh refinement, which are not supported by K-Athena on GPUs.

Finally, I also tested parallel IO at scale using Parthenon's parallel HDF output. Again, the results were very encouraging. Even writing files of 6TB size at approx. 350GB/s did not cause any issues. | |

| Apr 2021 |

Latest papers published

The latest two papers are now available on the homepage of the respective publishers:

Despite hitting some roadblocks in the process of adapting to a new supercomputer in the first year, our Leadership Resource Allocation (LRAC) on Frontera has been renewed for a second year. I will continue to run simulations to study the role of low collisionality in compressible, magnetized turbulence with an emphasis on the hot, dilute plasma found in the intracluster medium.

MHD support in AthenaPK

Over the past two months many new features have been added to AthenaPK. This includes first, second and third order hydrodynamics as well as support for magnetohydrodynamics simulations using the generalized Lagrange multiplier divergence cleaning approach by Dedner et al. 2002; see the README in the repository for a complete list of supported features. As before, AthenaPK follows a "device first" design in order to achieve high performance. In other words, there are no explicit data transfers between the host and devices (such as GPUs) because of the comparatively slow data transfer in between.

Finally, a Parthenon team applied and was accepted to the Argonne GPU Hackathon 2021. Over the course of several days we will work with mentors on improving the overall performance with an emphasis on load balancing and mesh refinement on GPUs. | |

| Feb 2021 |

Marie Skłodowska-Curie Actions

I am happy to share that my proposal on Unraveling effects of anisotropy from low collisionality in the intracluster medium will receive funding under the Marie Skłodowska-Curie Actions. Later this year I will move to Hamburg and work with Marcus Brüggen at the Hamburg Observatory.

AthenaPK is public

I am also happy to share that the (magneto)hydrodynamics code on top of Parthenon is now publicly available. While it is still in development, second order hydrodynamics with mesh refinement on devices (such as GPUs) is working. More details can be found in the repository. As with Parthenon, we welcome any questions and feedback, and would be happy to hear from you if you want to get involved directly. | |

| Jan 2021 |

Happy New Year!

I am happy to report that our development code AthenaPK is now capable of running second-order, compressible hydrodynamics with adaptive mesh refinement (AMR) fully on GPUs (see the movie above). AthenaPK will implement the (magneto)hydrodynamic methods of Athena++ on top of the performance portable AMR framework Parthenon and Kokkos. We are currently working on further improving AMR performance for small block sizes. Stay tuned for further updates. | |

| Sep 2020 |

Research update

We recently submitted two papers:

| |

| Jul 2020 |

Splinter Meeting on Computational Astrophysics

I am co-organizing the splinter meeting on Computational Astrophysics as part of the virtual German Astronomical Society Meeting Sep 21-25 2020. Abstract submission is open until 15 August. The general meeting registration is open until 15 September and there is no registration fee.

Splinter abstract: Numerical simulations are a key pillar of modern research. This is especially true for astrophysics where the availability of detailed spatial and temporal data from observations is often sparse for many systems of interest. In many areas large-scale simulations are required, e.g., in support of the interpretation of observations, for theoretical modeling, or in the planning of experiments and observation campaigns. The need for and relevance of large-scale simulations in astrophysics is reflected in a significant share of 25-30% of the overall German supercomputing time. While the supercomputing landscape has been stable for a long time, it started to change in recent years on the path towards the first exascale supercomputer. New technologies such as GPUs for general purpose computing, ARM based platforms (versus x86 platforms), and manycore systems in general have been introduced and require rethinking and revisiting traditional algorithms and methods.

This splinter meeting will bring together experts in computational astrophysics from all fields covering (but not limited to) fluid-based methods (from hydrodynamics to general relativistic magnetohydrodynamics), kinetic simulations, radiation transport, chemistry, and N-body dynamics applied to astrophysical systems on all scales, e.g., supernovae, planetary and solar dynamos, accretion disks, interstellar, circumgalactic, and intracluster media, or cosmological simulations. The goal of this meeting is to present and discuss recent developments in computational astrophysics and their application to current problems. Thus, contributions involving large-scale simulations and new methods/algorithms are specifically welcome. In addition to astrophysical results obtained from simulations, speakers are also encouraged to highlight numerical challenges they encountered and how they addressed those in their codes. These may include, but are not limited to, new algorithms (e.g., higher-order methods), changing HPC environments (e.g., manycore, GPUs, or FPGAs), or data storage (e.g., availability of space, sharing, or long term retention).

K-Athena paper accepted for publication

After a revision that primarily addressed details in the roofline analysis, the K-Athena paper has been accepted for publication in IEEE Transactions on Parallel and Distributed Systems. Please find the final (early access) version at doi:10.1109/TPDS.2020.3010016 as well as an updated preprint at arXiv:1905.04341. | |

| Apr 2020 |

Postdoctoral Excellence in Research Award

I am happy to report that I am one of the recipients of this year's MSU Postdoctoral Excellence in Research Award. This annual award for two postdocs at MSU from all disciplines is provided by the Office of the Senior Vice President for Research and Innovation and the MSU Office of Postdoctoral Affairs and recognizes exceptional contributions to MSU and the greater research community. I will give a brief presentation of my work during the reception (virtual this year) in mid-May. | |

| Mar 2020 |

Leadership Resource Allocation on Frontera

| |

| Feb 2020 |

Research update

| |

| Nov 2019 |

Research update

Our recent work on non-isothermal MHD turbulence, which I presented both in Los Alamos in October and at the Cosmic turbulence and magnetic fields: physics of baryonic matter across time and scales meeting in Cargèse in November, has been accepted for publication in The Astrophysical Journal (official link will follow once available). Moreover, the paper on internal energy dynamics in compressible hydrodynamic turbulence has been accepted for publication in Physical Review E. Official reference: W. Schmidt and P. Grete, Phys. Rev. E 100, 043116, and preprint arXiv:1906.12228. Finally, the K-Athena tutorial sessions I gave in Los Alamos were well received. Stay tuned for updates on the code developments. | |

| Oct 2019 |

The Enzo code paper

has been published in The Journal of Open Source Software (JOSS).

This version includes the subgrid-scale model for compressible MHD turbulence I developed. JOSS is a developer-friendly, open access journal for research software packages (about JOSS). In addition to a code peer review (mostly focusing on improving the quality of the submitted software, e.g., with respect to documentation, functionality, or testing), each accepted submission is assigned a Crossref DOI that can be cited. This supports the recognition of the software developers and the overall significant (but still all too often underappreciated) contribution of open source software to science. | |

| Sep 2019 |

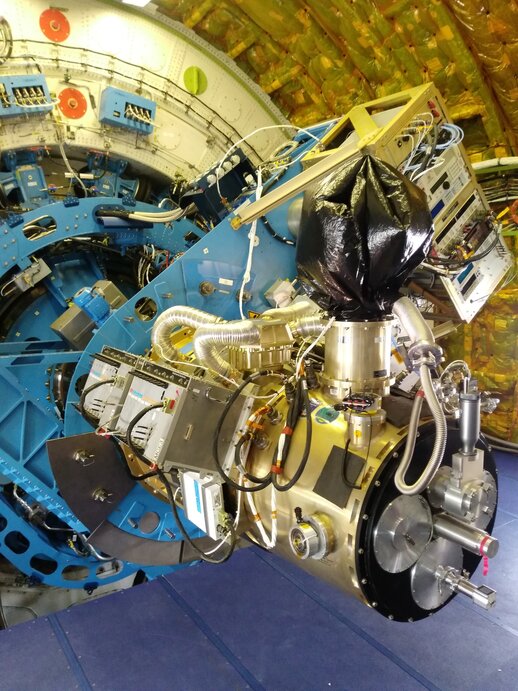

During the German Astronomical Society Meeting

in Stuttgart I had the chance to tour SOFIA and

see this impressive technical achievement in person.

SOFIA (Stratospheric Observatory for Infrared Astronomy) is a 2.7m telescope mounted on a plane so that observations can be made from high altitude (above most of the water vapor in the atmosphere, which absorbs infrared light).

In addition, I organized a Splinter Meeting on Computational Astrophysics during the conference. Many different topics were presented, ranging from galaxy mergers to star formation to radiative transport methods. On top of the science presentations, we also used the meeting to discuss current trends and developments in computational (astro)physics, including GPU computing and FPGAs. | |

| Aug 2019 |

Research update

In addition to the work on K-Athena/performance portability, several more physics-focused projects have reached maturity over the past months.

| |

| May 2019 |

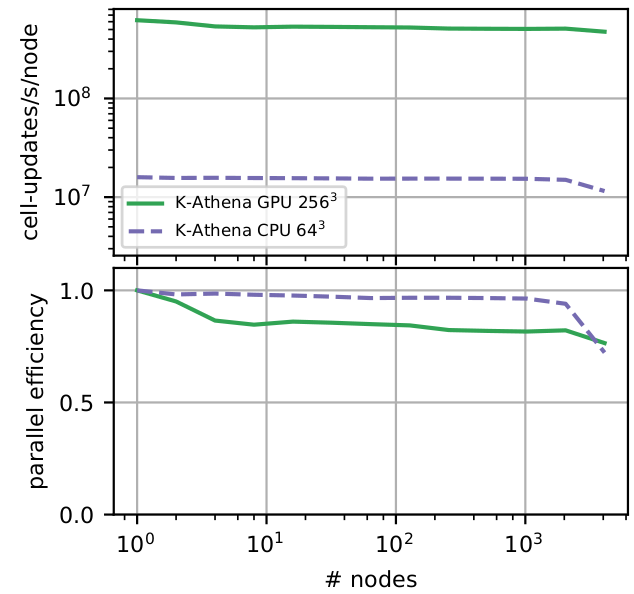

K-Athena is public!

Last year at the Performance Portability with Kokkos training we started to experiment with combining Kokkos with Athena++ in order to allow for GPU-accelerated simulations. The experiment was a complete success and we are happy to make the resulting K-Athena (= Kokkos + Athena++) code publicly available on GitLab. Details on the porting experience, roofline models on CPUs and GPUs, and scaling on many different architectures are presented in the accompanying code paper (arXiv preprint), which is currently under review. In the paper, we demonstrate that K-Athena achieves about 2 trillion (10^12) total cell-updates per second for double precision MHD on Summit, currently the fastest supercomputer in the world. This translates to updating an MHD simulation with a resolution of 10,000^3 twice per second! At about 80% parallel efficiency using 24,576 Nvidia V100 Volta GPUs on 4,096 nodes, K-Athena achieves a speedup of more than 30 compared to using all available 172,032 CPU cores. The code release has also been featured on Tech Xplore, and we encourage feedback and/or contribution to the code via the GitLab repository.
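For readers who want to retrace the arithmetic behind these numbers, here is a minimal sketch in Python. It uses only the figures quoted above. The function name and dictionary keys are purely illustrative, and the "more than 30" speedup is treated as exactly 30, so the derived GPU-to-core equivalence is a rough lower bound rather than a measured result.

```python
def summarize_throughput():
    """Back-of-the-envelope numbers derived from the figures quoted in the text."""
    cell_updates_per_second = 2e12  # about 2 trillion cell-updates per second
    cells_per_dimension = 10_000    # grid resolution of 10,000^3
    gpus = 24_576                   # Nvidia V100 GPUs used
    nodes = 4_096                   # Summit nodes used
    cpu_cores = 172_032             # CPU cores available on those nodes
    speedup = 30                    # assumption: "more than 30" taken as exactly 30

    total_cells = cells_per_dimension ** 3  # 10^12 cells in the full grid
    cores_per_gpu = cpu_cores // gpus       # CPU cores available per GPU

    return {
        # How often the full 10,000^3 grid can be updated each second
        "full_grid_updates_per_second": cell_updates_per_second / total_cells,
        # Hardware layout implied by the quoted totals
        "gpus_per_node": gpus // nodes,
        "cpu_cores_per_node": cpu_cores // nodes,
        # Average rate sustained by each GPU in the full-scale run
        "cell_updates_per_gpu_per_second": cell_updates_per_second / gpus,
        # Rough number of CPU cores one GPU replaces at the assumed speedup
        "cpu_cores_matched_by_one_gpu": speedup * cores_per_gpu,
    }


if __name__ == "__main__":
    for name, value in summarize_throughput().items():
        print(f"{name}: {value:,.1f}")
```

Under these assumptions, each GPU sustains on the order of 10^8 cell-updates per second, and one GPU does the work of roughly 200 CPU cores.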

Image credit: Carlos Jones/ORNL CC-BY-2.0 | |

| Apr 2019 |

After I took part in the

Professional Development Program

of the Institute for Scientist & Engineer Educators last year for the first time,

I returned this year as a design team leader.

Our design team (consisting of Rachel Frisbie and Forrest Glines — two MSU graduate students) will develop an inquiry activity on Sustainable Software Practices with Collaborative Version Control. We will teach the activity for two REU (Research Experiences for Undergraduates) programs at MSU in the summer. If you are interested in the teaching material, please contact me. We are happy to share the material and experience. | |

| Mar 2019 |

Our review paper covering Kris Beckwith's invited talk at ICOPS 2018

on Correlations and Cascades in Magnetized Turbulence

(Beckwith, Grete, and O'Shea 2019)

has been published in IEEE Transactions on Plasma Science last month.

Similarly, I also gave several talks, e.g., in Budapest, Las Vegas, Berkeley, and UC Santa Cruz, covering our recent results on correlations, energy transfer, and statistics in adiabatic, compressible MHD turbulence. In addition, I presented first results on our GPU-enabled version of Athena++ using Kokkos. Stay tuned — more information will follow shortly. | |

| Dec 2018 |

Last year when I gave a talk at the MIPSE (Michigan Institute for Plasma Science and

Engineering) seminar in Ann Arbor I was also interviewed.

The interview is part of a MIPSE sponsored outreach program to capture some of the

importance (and excitement) of plasmas for students and the general public.

The short three-minute clip is now available on YouTube. All interviews of the series can be found on the MIPSE YouTube channel. | |

| Aug 2018 |

Last month I took part in the

Performance Portability with Kokkos training at

Oak Ridge National Laboratory. The four-day training was organized by the Exascale Computing Project and covered the Kokkos programming model and library for writing performance portable code. In other words, code is written only once and can afterwards be compiled for different target architectures, e.g., CPUs or GPUs, automatically taking into account architecture-specific properties such as the memory layout and hierarchy. We are currently implementing this approach and will use it for the next project. In addition, SparPi, the Raspberry Pi supercomputer model, made another appearance this month at Astronomy on Tap. I presented a talk on What’s so super in supercomputer? Theory & Practice, including a live demonstration. After the presentation, people made ample use of the opportunity to get their hands on the model themselves and directly interact with the live simulation.

| |

| May 2018 |

Our latest paper on Systematic Biases in Idealized Turbulence Simulations has been

accepted for publication in The Astrophysical Journal Letters, see

Grete et al 2018 ApJL 858 L19. We show how the autocorrelation time of the forcing is intrinsically linked to the amplitude of the driving field, and how this affects the presence of (unphysical) compressive modes even in the subsonic regime. I also presented the results at

| |

| Apr 2018 |

MSU's science festival was a great success.

We had a lot of interested people of all ages at our booth. The assembled supercomputer model featured all components of an actual supercomputer, e.g., power supply units, networking/interconnects, compute nodes, and a frontend. On the frontend we are running TinySPH, an interactive hydrodynamics code that allows for both illustrating high performance computing techniques such as dynamic load balancing, and illustrating physics by changing fluid parameters such as viscosity or density. In addition, we offered multiple virtual reality experiences including one that featured a magnetohydrodynamic turbulence simulation. Visitors were able to dive into a turbulent box and experience the rich and complex fluid structure interacting with highly tangled magnetic fields from within.

| |

| Feb 2018 |

I am very happy that I was accepted to participate in the

2018 Professional Development Program of the Institute for Scientist & Engineer Educators.

This inquiry based teaching training covers multiple workshops where the participants

collaboratively design an inquiry activity that will eventually be implemented in class later

this year.

The first workshop is the "Inquiry Institute" from March 25-28, 2018 in Monterey, CA.

In addition, I will attend SnowCluster 2018 - The Physics of Galaxy Clusters from March 18 - 23, 2018 in Salt Lake City where I present a | |

| Feb 2018 |

Parts for a Raspberry Pi based "supercomputer" arrived. We will use the system for outreach, e.g., to demonstrate high performance computing, and in class for hands-on tutorials. I am currently setting the system up, which consists of 8x Raspberry Pi 3 for a total of 32 cores with 8 GB main memory. The system will premiere at MSU's science festival. Visit our booth at the Expo on April 7 for Hands-on Supercomputing and multiple Virtual Reality experiences.

| |

| Nov 2017 |

I presented the latest results on energy transfer in compressible MHD turbulence

| |

| Sep 2017 |

My PhD thesis on Large eddy simulations of compressible magnetohydrodynamic turbulence was

awarded this year's

Doctoral Thesis Award by the

German Astronomical Society. I presented the work at the Annual Meeting of the German Astronomical Society. See official press release [pdf] (in German), and MSU press releases (in English) from the CMSE department and the College of Natural Science. | |

| Sep 2017 | Our article on Energy transfer in compressible magnetohydrodynamic turbulence has been accepted for publication. In the paper, we introduce a scale-by-scale energy transfer analysis framework that specifically takes into account energy transfer within and between kinetic and magnetic reservoirs by compressible effects. The paper appears as a featured article in the journal Physics of Plasmas. Moreover, the article is covered by an AIP Scilight. | |

| Sep 2017 | We (PI B. W. O'Shea, Co-PIs B. Côté, P. Grete, and D. Silvia) successfully obtained computing time through an XSEDE allocation. I will use the resources to study driving mechanisms in astrophysical systems. | |

| Aug 2017 | I took part in the Argonne Training Program on Extreme-Scale Computing. This intensive two-week training is funded by the DOE's Exascale Computing Project and allowed me to gain knowledge and hands-on experience on next-generation hardware, programming models, and algorithms. I can highly recommend this training to everyone involved in high-performance computing. | |

| Jun 2017 |

I presented first results of our energy transfer study for compressible MHD turbulence

and the method itself

|